Back to the Future-Proof: “Even-If” Engineering for Trustworthy, Resilient AI

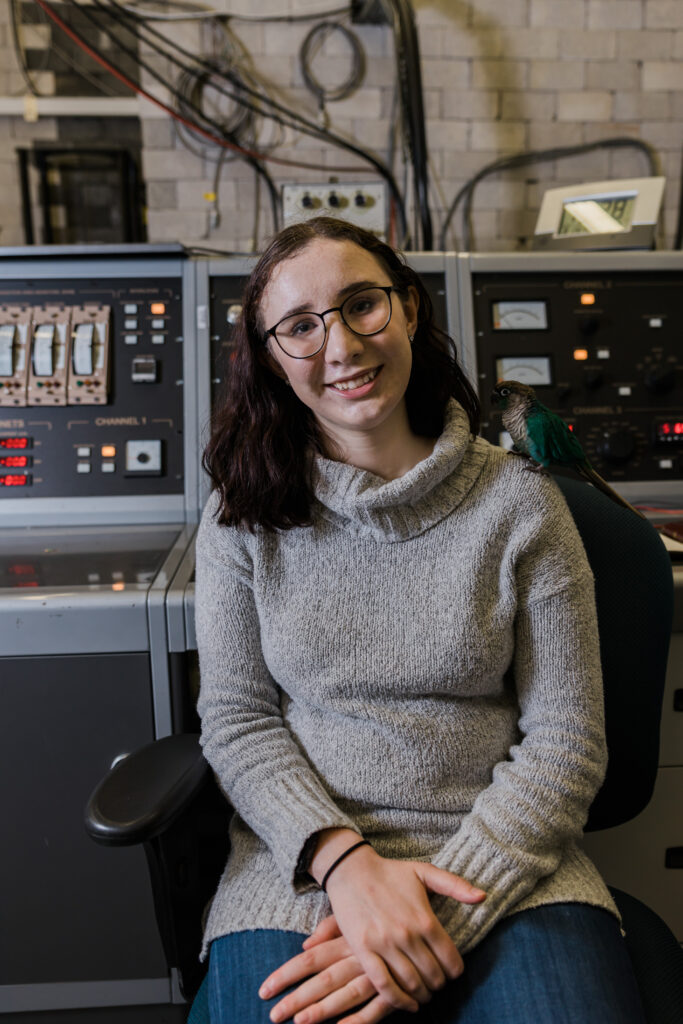

Patience Christi Yockey – Idaho National Laboratory

Artificial Intelligence (AI) is rapidly transforming grid operations, enabling applications in detection, prediction, control, optimization, and customer engagement. Yet, adoption is not without significant challenges. AI systems must remain secure and resilient against evolving cyber-physical threats while managing model drift effectively to maintain accuracy over time. Building trust and accountability requires robust governance, validation, and explainability frameworks—areas where industry standards are still emerging.

AI can fail in grid applications because of data issues, cyber threats, autonomy risks, lack of transparency, and integration challenges. Resolving these failures is made harder by AI’s non-deterministic nature: troubleshooting and root-cause identification are often obscured by models that are unexplainable or difficult to interpret. For example, an AI system used for load forecasting can mispredict demand during an unprecedented heatwave, leading to over-generation. At the same time, human-AI collaboration demands research to optimize decision-making and reduce error susceptibility, ensuring that humans and machines complement rather than conflict with each other. Risk and liability remain unclear in the event of operational failures, making insurance practices and multi-stakeholder dialogue essential for responsible deployment.

To address these challenges, Idaho National Laboratory (INL) has launched industry-driven initiatives including Trustworthy AI for Grid Resilience (TAIGR), Cognito, Artificial Intelligence Management and Research for Advanced Networked Testbed Hub (AMARANTH), Operational Resilience for Critical Hardware and Infrastructure Under Drift (ORCHID), and Cyber-Informed Engineering (CIE) focused on AI fitness for purpose and governance. Drawing on these programs, this presentation will discuss key questions such as:

1. How do we ensure that the processes selected for AI implementation are ready and risk-aligned?

2. How can we ensure AI systems remain secure and resilient against evolving threats and model drift?

3. What governance, validation, and explainability frameworks are needed to build trust and accountability in AI decisions?

4. What specific safeguards and design strategies can prevent unintended consequences of automation or autonomy in critical systems?